Introduction: Navigating the Security Landscape in the AI Era

In the era of Artificial Intelligence (AI), security concerns have become paramount. AI systems, with their intricate architecture and interconnectedness, face heightened risks that demand immediate attention. While countering ‘Data Bias’ remains a crucial challenge, AI security stands as a pressing issue for architects, engineers, and organizations alike. Threats such as Data Poisoning, Model Extraction, and Customized Adversarial Attacks endanger the integrity and viability of AI systems. Failure to address these vulnerabilities could have severe consequences, ranging from financial losses to reputational damage and even physical harm.

AI security is not merely a recommendation; it is a warning that every organization must heed. Its importance extends beyond protecting individual systems: it is crucial for building trust and ensuring the widespread adoption of AI technologies. As AI becomes increasingly integrated into our lives, we must be able to trust these systems to operate safely and securely. By prioritizing AI security, we can pave the way for a future where AI empowers humanity rather than endangering it.

Understanding the Threat Landscape: Traditional and AI-Specific Attacks

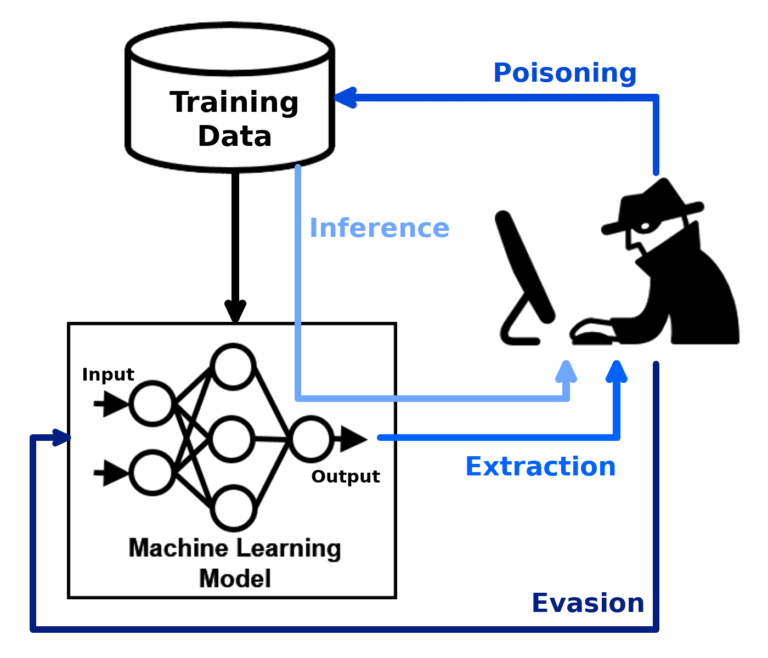

AI security threats can be broadly classified into two categories: traditional attacks and AI-specific attacks. Traditional attacks encompass familiar risks such as malware, phishing, and denial-of-service attacks, which can affect any system. However, it’s the AI-specific attacks that pose a unique challenge. These targeted assaults are meticulously designed to exploit the vulnerabilities inherent in AI systems, often employing innovative techniques, making detection and defense significantly more challenging.

AI-Specific Attacks: A Closer Look

- Data Poisoning: Corrupting the Foundation of AI Learning

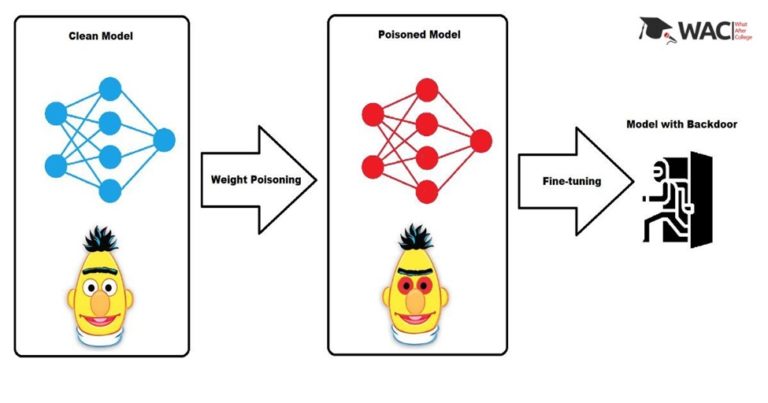

From misdiagnosing patients to misclassifying images, data poisoning is a stealthy and insidious attack that corrupts the foundation of AI learning. By injecting misleading data into the training dataset, attackers can subtly manipulate the AI system into making inaccurate predictions or deliberate malicious decisions. This form of manipulation can have devastating consequences in critical applications like medical diagnosis or financial forecasting.

For example, in 2018, researchers (1) demonstrated how data poisoning could be used to fool an image recognition system into misclassifying images of traffic signs. Such an attack could have catastrophic consequences if it were used against self-driving cars.
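To make the mechanism concrete, here is a minimal Python sketch of poisoning by injecting mislabeled training points. It assumes a hypothetical one-dimensional nearest-centroid classifier and invented data; it is not the setup used in (1), only an illustration of how a handful of bad training samples can shift a decision boundary.

```python
"""Toy illustration of data poisoning via injected, mislabeled training points.

Assumption: a hypothetical 1-D nearest-centroid classifier stands in for a
real model; labels and values are invented for illustration.
"""
from statistics import mean


def train_centroids(samples):
    """Return the mean feature value for each label."""
    by_label = {}
    for x, label in samples:
        by_label.setdefault(label, []).append(x)
    return {label: mean(xs) for label, xs in by_label.items()}


def predict(centroids, x):
    """Assign x to the label whose centroid is closest."""
    return min(centroids, key=lambda label: abs(centroids[label] - x))


# Clean training data: "stop" clusters near 1, "speed_limit" near 9.
clean = [(x, "stop") for x in (0.0, 1.0, 2.0)] + \
        [(x, "speed_limit") for x in (8.0, 9.0, 10.0)]

# The attacker injects points from the "stop" region labeled "speed_limit",
# dragging that class's centroid toward the "stop" cluster.
poison = [(x, "speed_limit") for x in (0.5, 1.0, 1.5, 2.0, 2.5, 3.0)]

clean_model = train_centroids(clean)
poisoned_model = train_centroids(clean + poison)

test_input = 3.0
print(predict(clean_model, test_input))     # stop
print(predict(poisoned_model, test_input))  # speed_limit
```

With the clean model, the `speed_limit` centroid sits at 9.0; after six poisoned points are added it moves to about 4.17, so an input that clearly belonged to `stop` is now classified as `speed_limit`.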

- Model Extraction: Unmasking the Theft of AI Intelligence

Another alarming threat is model extraction, where attackers attempt to steal the trained AI models themselves. Once extracted, these models can be replicated or used for malicious purposes, such as generating fake content, impersonating the original AI system, or even launching targeted attacks against other systems. The value of stolen AI models can be significant, making them targets for sophisticated criminal groups.

Researchers (2) have described how a large language model such as GPT-3, served through a cloud provider, could be targeted for extraction. GPT-3 is a powerful language model that can generate realistic and coherent text. A successful extraction would give attackers a copy of the model to use for their own purposes, such as generating fake news, spreading misinformation, or creating other harmful content. This scenario illustrates the danger of model extraction.
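The core idea of model extraction, learning a copy of a model purely from its answers, can be shown with a deliberately simple case. The Python sketch below assumes a hypothetical prediction API that returns raw scores from a linear model; with n features, n + 1 well-chosen queries are enough to recover its parameters exactly. Extracting a large language model is vastly harder and relies on different techniques, so treat this only as an illustration of the principle.

```python
"""Sketch of model extraction by querying a black-box prediction API.

Assumption: the hypothetical victim is a linear scoring model
f(x) = w . x + b that returns raw scores. Real models are far more complex,
but the principle of learning from query/response pairs is the same.
"""


class BlackBoxModel:
    """Stand-in for a remote prediction API; the attacker cannot read _w or _b."""

    def __init__(self, weights, bias):
        self._w = list(weights)
        self._b = bias

    def query(self, x):
        return sum(wi * xi for wi, xi in zip(self._w, x)) + self._b


def extract_linear_model(api, n_features):
    """Recover (weights, bias) of a linear model with n_features + 1 queries."""
    bias = api.query([0.0] * n_features)  # f(0) = b
    weights = []
    for i in range(n_features):
        unit = [0.0] * n_features
        unit[i] = 1.0
        weights.append(api.query(unit) - bias)  # f(e_i) - b = w_i
    return weights, bias


victim = BlackBoxModel(weights=[0.5, -2.0, 3.0], bias=1.0)
stolen_w, stolen_b = extract_linear_model(victim, n_features=3)
print(stolen_w, stolen_b)  # [0.5, -2.0, 3.0] 1.0
```

Mitigations such as strict access control, monitoring query volume, and returning only class labels instead of raw scores make this kind of attack considerably more expensive.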

- Adversarial Examples: Deceptive Inputs and AI Vulnerabilities

Adversarial examples are meticulously crafted inputs designed to exploit the vulnerabilities of AI systems. These seemingly innocuous inputs, often undetectable by the human eye, can cause AI systems to make grave errors, leading to misclassified images, incorrect financial predictions, and even malfunctions in self-driving cars – with potentially catastrophic consequences.

A striking example of the power of adversarial examples comes from image recognition. In a study led by researchers at Google (3), Szegedy et al. showed that adding carefully crafted noise to an image could fool a neural network into misclassifying it. The noise, imperceptible to the human eye, consisted of small changes to pixel values. Subsequent research demonstrated comparable attacks on images of traffic signs, which could lead to accidents if used against self-driving cars. These results underscore the urgent need for robust defenses against adversarial examples. As AI systems become increasingly pervasive and integrated into critical infrastructure, effective defenses will be crucial to ensuring their safety and reliability.
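The attack in (3) relied on an optimization procedure; a simpler and widely used later technique, the fast gradient sign method (FGSM), shows the same effect. The Python sketch below applies FGSM to a toy logistic-regression model. The weights, input, and epsilon are hypothetical, and the attacker is assumed to know the model's weights (a white-box setting).

```python
"""Fast gradient sign method (FGSM) against a toy logistic-regression model.

Assumptions: weights, input, and epsilon are hypothetical; the attacker
knows the model's parameters (white-box setting).
"""
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    """Probability that x belongs to class 1."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y_true, epsilon):
    """Move every feature by epsilon in the direction that increases the loss.

    For logistic regression with cross-entropy loss, the gradient of the
    loss with respect to the input x is (p - y) * w.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y_true) * wi for wi in w]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


w, b = [2.0, -1.5, 1.0, 0.5], -0.2
x = [0.3, 0.1, 0.2, 0.1]  # correctly classified as class 1
adv = fgsm(w, b, x, y_true=1, epsilon=0.2)

print(round(predict_proba(w, b, x), 3))    # 0.622 -> class 1
print(round(predict_proba(w, b, adv), 3))  # 0.378 -> flipped to class 0
```

In this four-feature toy the perturbation is large relative to the input. In an image with many thousands of pixels, a tiny per-pixel change can add up to a large change in the model's output, which is why adversarial noise can remain imperceptible.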

Fortifying AI Systems: A Multifaceted Approach

Protecting AI systems from these threats requires a multifaceted strategy. Securing the data used for AI training is paramount, which means preventing unauthorized access, modification, or poisoning of that data. Similarly, the AI models themselves must be safeguarded against illicit access and alteration. Equally important, and often overlooked, is securing the infrastructure on which AI systems are deployed.
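One concrete control against unauthorized modification of training data is an integrity manifest: record a cryptographic digest of every item while the data is trusted, then verify it before each training run. The Python sketch below uses the standard-library `hashlib` module; the in-memory dataset and record names are hypothetical.

```python
"""Detect tampering with training data by comparing SHA-256 digests.

Assumption: a hypothetical in-memory dataset of named records. In practice
the manifest would cover files and be stored where the training pipeline can
read it but an attacker cannot modify it.
"""
import hashlib


def digest(record: bytes) -> str:
    return hashlib.sha256(record).hexdigest()


def build_manifest(dataset: dict) -> dict:
    """Record a digest for every item while the data is known to be good."""
    return {name: digest(data) for name, data in dataset.items()}


def verify(dataset: dict, manifest: dict) -> list:
    """Return the sorted names of records that were modified, added, or removed."""
    problems = [name for name, data in dataset.items()
                if manifest.get(name) != digest(data)]  # changed or unknown
    problems.extend(name for name in manifest if name not in dataset)  # removed
    return sorted(problems)


dataset = {"img_001": b"stop sign pixels", "img_002": b"yield sign pixels"}
manifest = build_manifest(dataset)

dataset["img_002"] = b"yield sign pixels with trigger patch"  # tampering
dataset["img_003"] = b"injected sample"                        # injection
print(verify(dataset, manifest))  # ['img_002', 'img_003']
```

A manifest only detects changes made after it was built; poisoned samples already present at that point will pass, so it complements rather than replaces data quality checks and anomaly detection.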

Here are some actionable guidelines for AI security that can help protect AI systems from such threats:

- Securing Data: Data is the lifeblood of AI systems, and its security is paramount. Combining data governance practices, access control mechanisms, and encryption helps keep training and operational data confidential, tamper-resistant, and resilient to attacks. Regular data quality checks and anomaly detection algorithms further strengthen data integrity.

- Protecting AI Intellectual Property and Models: AI models are intellectual assets with significant value. To safeguard them, access control mechanisms, encryption, and model obfuscation techniques can be combined, making it more difficult for attackers to extract, reverse engineer, or tamper with the models. Additionally, federated learning and other privacy-preserving machine learning techniques offer promising ways to protect models and their training data while still enabling collaboration and knowledge sharing.

- Monitoring and Alert Systems: Continuous monitoring of AI systems is crucial to detect anomalous behavior, performance deviations, or signs of potential attacks. Alert systems should be in place to notify security personnel promptly, enabling rapid response and mitigation efforts. The use of anomaly detection algorithms and machine learning techniques can further enhance monitoring effectiveness. Recent advances in explainable AI (XAI) techniques can also provide valuable insights into AI model behavior, making it easier to identify and address potential anomalies.

- Secure Infrastructure: The infrastructure upon which AI systems operate is equally important to protect. Network security, endpoint security, and application security measures should be deployed to safeguard the underlying infrastructure from unauthorized access, intrusions, and cyberattacks. Segmentation and access control mechanisms should restrict access to sensitive components, while intrusion detection and prevention systems should be in place for proactive defense. Recent advancements in zero-trust architecture and cloud security solutions can provide comprehensive protection for AI infrastructure.

- Training AI Systems for Robustness: To prepare AI systems for adversarial attacks and manipulation, they should be trained on diverse data. Adversarial training goes further: it generates adversarial examples against the model during training and includes them, with their correct labels, so that the model learns to withstand such inputs.

- Continuous Security Assessments: Regularly conduct security assessments of AI systems to identify and address vulnerabilities. Stay updated on the latest AI security threats and trends to adapt defense strategies accordingly.
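The monitoring and alerting guidance above can start with something as simple as a statistical baseline. The Python sketch below flags clients whose current query volume sits far above their own history, which is one possible signal of a model-extraction attempt. The client names, counts, and the three-standard-deviation threshold are hypothetical choices for illustration.

```python
"""Flag clients whose query volume deviates sharply from their own baseline.

Assumptions (hypothetical): hourly query counts per client are already
collected, and a z-score above 3 is treated as anomalous.
"""
from statistics import mean, pstdev


def anomalous_clients(history, current, threshold=3.0):
    """Return the sorted names of clients whose current count is more than
    `threshold` standard deviations above their historical mean."""
    alerts = []
    for client, counts in history.items():
        mu = mean(counts)
        # Floor the spread at 1 query so perfectly steady clients don't divide by zero.
        sigma = max(pstdev(counts), 1.0)
        z = (current.get(client, 0) - mu) / sigma
        if z > threshold:
            alerts.append(client)
    return sorted(alerts)


history = {
    "client_a": [100, 110, 95, 105, 90],
    "client_b": [40, 45, 50, 42, 48],
}
current = {"client_a": 102, "client_b": 900}

for client in anomalous_clients(history, current):
    # A real deployment might page security staff or open an incident instead.
    print(f"ALERT: unusual query volume from {client}")
```

Per-client baselines catch sudden bursts; slow, low-volume extraction would need other signals, such as unusual input distributions.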

Conclusion: Forging the Future of AI by Harmonizing Security and Innovation

In the ever-evolving tapestry of technology, the symphony of Artificial Intelligence plays a pivotal role, promising boundless possibilities and transformative innovations. Yet, within this dynamic landscape, the crescendo of progress is met with the haunting undertones of security vulnerabilities. As we stand at the crossroads of innovation and risk, the journey towards securing AI systems emerges as an imperative quest, not just for architects and engineers but for the collective guardianship of our digital future.

AI security is more than a safeguard; it is the sentinel that watches over the ethical compass of technological advancement. Beyond the intricate dance with traditional threats lie the shadows of bespoke adversarial assaults—data poisoning, model extraction, and the subtle manipulation of AI’s cognitive senses. To navigate this intricate maze, our commitment to fortification must be as dynamic as the threats themselves.

The roadmap to resilient AI security extends beyond encryption and firewalls. It is a holistic expedition that intertwines the sanctity of data, the fortification of intellectual treasures, vigilant monitoring, and the harmonious collaboration of minds. The echoes of our diligence reverberate not only through the circuits of individual systems but resonate across the societal landscape, shaping the narrative of trust and reliability.

In this shared vigilance, each stride towards securing AI is a testament to our commitment to a future where technology empowers without compromise. It is a promise to the generations yet to come: a promise that the fruits of artificial intelligence will grow in a garden of safety, responsibility, and ethical stewardship.

As I close this article, let us not merely acknowledge the gravity of the challenge but embrace the potential within it. Through the relentless pursuit of knowledge, collaboration, and adaptive resilience, we can shape a future where AI is not just a marvel of innovation but proof of our dedication to safeguarding progress itself. The symphony of Artificial Intelligence shall endure, not as a vulnerability to be feared but as a beacon illuminating the path towards a secure, responsible, and harmonious coexistence with our technological creations.

Resources:

(1) Y. Lin, T. Pang, C. Shen, Q. Zhang, and H. Liu. “Poisoning Attacks on Adversarial Training Causes Strange and Unexpected Behavior.” 2018 IEEE Symposium on Security and Privacy (SP).

(2) B. Recht, D. Schroeder, and R. Wexler. “Extracting GPT-3 from Cloud Services: A Threat to AI Security and Privacy.” 2022 IEEE Symposium on Security and Privacy (SP).

(3) C. Szegedy et al. “Intriguing Properties of Neural Networks.” arXiv preprint arXiv:1312.6199 (2013).

(4) Defense Advanced Research Projects Agency (DARPA): https://www.darpa.mil/

(5) National Security Agency (NSA): https://www.nsa.gov/

(6) National Institute of Standards and Technology (NIST): https://www.nist.gov/

(7) Google AI: https://ai.googleblog.com/

(8) OpenAI: https://openai.com/

(9) Microsoft Research: https://www.microsoft.com/en-us/research/