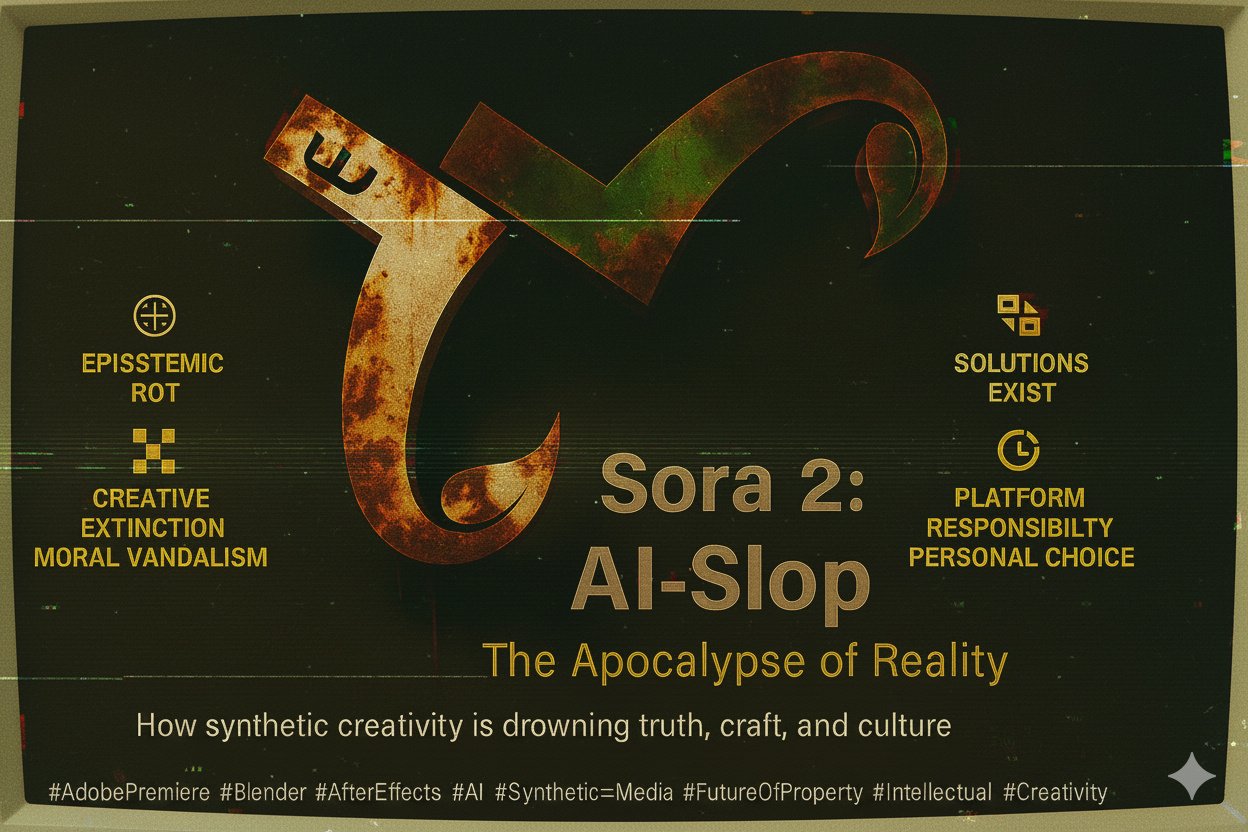

What happens when every video, voice, and face you see is fake, and no one cares anymore?

#Sora 2 isn’t just another AI app; it’s the dawn of the AI-slop apocalypse.

How synthetic creativity is drowning truth, craft, and culture

Ten years ago, a platform where every video was fake sounded like absurd science fiction. Today it is ordinary life. Sora 2 allows anyone to appear anywhere, rewrite voices, and alter the past with a few lines of text. What was once a creative experiment has turned into a global factory of illusions that floods social feeds with endless synthetic material. The result is not a creative revolution but a slow erosion of meaning.

Real creators who spent years learning #AdobePremiere, #Blender, or #AfterEffects now compete with machines that can generate clips in seconds. Algorithms reward speed, novelty, and constant output rather than skill or truth. The more people post, the less anything lasts. What used to be a craft is being replaced by an automated loop that produces attention instead of art.

Copyright conflicts are multiplying. Film studios and record labels are suing AI companies for using protected data to train their models. Musicians and illustrators accuse developers of copying their style and voice without consent. Writers’ and performers’ unions are demanding new contract clauses to guarantee pay and protection from replacement. These are not theoretical concerns. They are early signs of a system that absorbs human creativity and turns it into training material with no credit or reward.

Governments are trying to respond, but progress is uneven. A few laws now require platforms to label synthetic media, remove harmful deepfakes, and disclose how training data was collected. Yet legislation moves slowly while these models evolve daily. By the time one rule is enforced, new tools already exist to bypass it. The gap between innovation and protection keeps widening.

Sora 2’s deepfake and #cameo features go beyond entertainment. They rewrite memory and history. People can place themselves inside real events or alter public speeches to say what they never said. The danger is not only deception but the quiet destruction of shared evidence. When every image can be fabricated, the foundation of trust collapses.

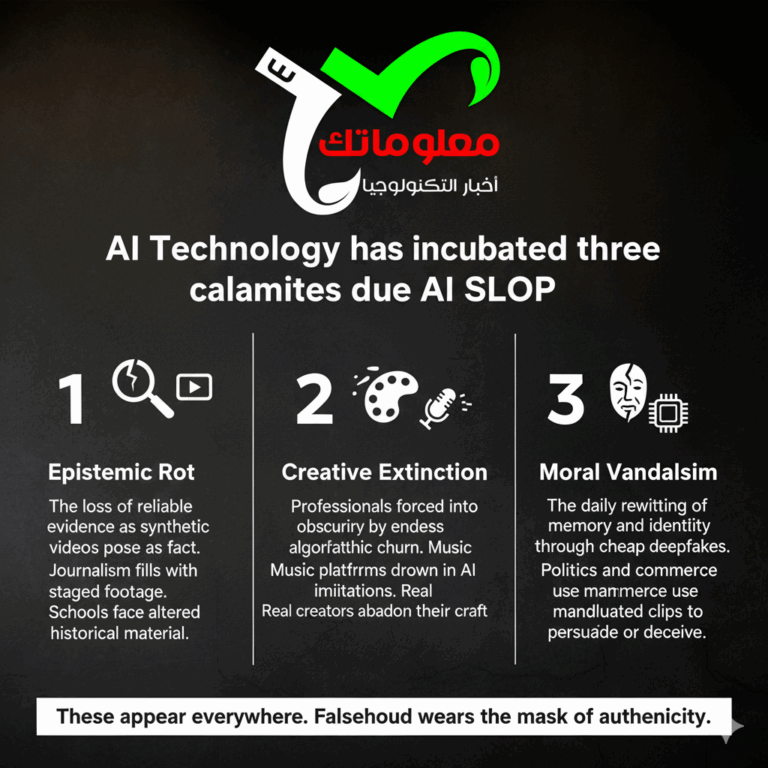

Technology has already seeded at least three calamities. First, epistemic rot: the loss of reliable evidence as synthetic clips circulate as fact. Second, creative extinction: skilled professionals forced into invisibility by algorithmic churn. Third, moral vandalism: the everyday rewriting of memory and identity through cheap deepfakes. These harms are visible across fields. Journalism faces fake eyewitness videos. Music platforms overflow with imitations of real artists. Education risks teaching altered history. Political feeds run on manipulated clips. Advertising exploits cloned faces for false endorsements. The harm compounds because falsehood now looks authentic while genuine creators are buried beneath limitless replicas.

The promise of “democratized creativity” hides a deep inequality. Most of the new content comes not from individuals but from automated farms that flood platforms with templated clips. The skill that matters is no longer storytelling but prompt engineering. Those who master the algorithm dominate visibility. Those who rely on traditional craft disappear. The attention economy rewards speed and repetition, not substance or care.

Behind these trends are human losses. A filmmaker who invested years in cameras and postproduction sees their work outperformed by AI videos made in minutes. A singer’s cloned voice is used in ads they never approved. Artists who once shaped digital culture now watch it reshaped without them.

Solutions exist, but they require deliberate action. Platforms can label synthetic content, share profits when someone's likeness or data is used, and add friction to limit automated spam. Lawmakers can strengthen creators’ rights to control and license their digital identity. Training datasets should be transparent so that creators know how their work is used. Unions can negotiate digital rights and fair royalties for AI-assisted reuse. These steps may sound procedural, but they are essential if art is to survive the flood.

There is still hope in personal choice. People can decide what to share and reward authenticity instead of noise. They can pause before forwarding a fake clip, support verified creators, and prefer depth over speed. Sora 2 and tools like it are mirrors that reveal the kind of world we are building. If we surrender that mirror to convenience, truth in media may soon become nostalgia.

The machines didn’t steal creativity; we outsourced it for the comfort of speed. The real battle is no longer human versus machine but conscience versus complacency. The age of AI-slop isn’t approaching; it’s already devouring the spaces where truth once lived. Every click, every lazy share, every mindless scroll, every prompt that replaces artistry is a quiet surrender. If this continues, authenticity will erode slowly, painfully, layer by layer, until all that remains are hollow cameos. AI will not end art; apathy will. This is the final test of reality, and only those who still care enough to create honestly will stand out against the rising ocean of noise.